Confession: I love The Big Bang Theory and thanks to TiVo, I'm working on watching all 279 episodes, in order.

But in just about every episode, there's this stuff that makes my physics major brain hurt a bit:

Reader, as Wolfgang Pauli allegedly said: "That is not only not right; it is not even wrong." Electrons are not shiny balls; they do not orbit atomic nuclei on shiny rails. (They don't make whooshing sounds, either, but that's even more of a quibble.)

Now: at a certain level, attempting to visualize what atoms "really look like" is futile. It's just math down there, solutions to the Schrödinger equation, or some other formulation.

But they could at least try to get the scale right.

Or, more accurately, once you try to get the scale right, you can see why they didn't.

Let's imagine—because we're not going to actually do it—building a scale model of a good old water molecule, H2O: an oxygen atom, with two hydrogen atoms hanging off to one side.

A hydrogen atom nucleus, a single proton, has a radius ("root mean square charge radius") of 8.4e-16 meters.

Let's say our scale model uses a ping-pong ball to represent a proton. A ping-pong ball's radius is 20 millimeters, or 2.0e-2 meters. (Fascinating fun fact from the link: "the size increased from 38mm to 40mm after the 2000 Olympic Games." I did not know that!)

So for our scale model, we have to multiply atomic/molecular distances by a scale factor of (2.0e-2/8.4e-16) = 2.38e13 (i.e., just under 24 trillion).

The radius of an oxygen atom's nucleus is generally reported to be 2.8e-15 meters. Multiplying this by our scale factor gives 2.8e-15 * 2.38e13 = 6.7e-2 meters, or 67 millimeters, about the size of a medium grapefruit.
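If you'd rather let a computer do the multiplying, here's a minimal sketch of the arithmetic so far. The numbers are the ones quoted above; the variable names are just mine.

```python
# Values quoted in the text; names are illustrative only.
PROTON_RADIUS_M = 8.4e-16          # RMS charge radius of a proton
PING_PONG_RADIUS_M = 2.0e-2        # 40 mm ball, so a 20 mm radius
OXYGEN_NUCLEUS_RADIUS_M = 2.8e-15  # reported radius of an oxygen nucleus

# One scale factor converts every real distance into a model distance.
scale = PING_PONG_RADIUS_M / PROTON_RADIUS_M
oxygen_model_radius_mm = OXYGEN_NUCLEUS_RADIUS_M * scale * 1000

print(f"scale factor: {scale:.3g}")
print(f"oxygen nucleus in the model: {oxygen_model_radius_mm:.0f} mm radius")
```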

So: to start building our scale model, gather together a grapefruit and two ping-pong balls. Where do we put them?

This page reports the distance between the oxygen nucleus and the hydrogen nuclei is 0.943 angstroms, or 9.43e-11 meters.

This scales up to 9.43e-11 * 2.38e13 = 2244 meters. Or about 1.4 miles. So:

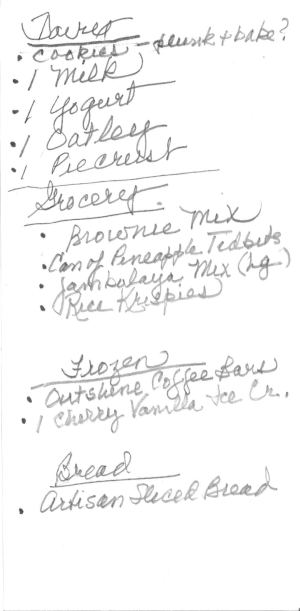

- Put your grapefruit down;

- Walk 1.4 miles in a straight line;

- Drop one ping-pong ball;

- Return to the grapefruit;

- Turn approximately 106° from your original direction;

- March another 1.4 miles, and drop your other ping-pong ball.
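For the map-minded, the walking directions above can be sketched as coordinates. This assumes flat ground, the grapefruit at the origin, and the first walk along the x axis; all of that (and the names) is my own setup, not anything from the chemistry.

```python
import math

SCALE = 2.38e13           # scale factor from the text
BOND_LENGTH_M = 9.43e-11  # oxygen-to-hydrogen distance quoted above
BOND_ANGLE_DEG = 106      # approximate turn angle from the list above
METERS_PER_MILE = 1609.344

leg_m = BOND_LENGTH_M * SCALE  # how far to walk for each ping-pong ball

# Grapefruit sits at (0, 0); the first ball is straight out along +x.
ball_1 = (leg_m, 0.0)
theta = math.radians(BOND_ANGLE_DEG)
ball_2 = (leg_m * math.cos(theta), leg_m * math.sin(theta))

# Straight-line distance between the two ping-pong balls.
gap_m = math.dist(ball_1, ball_2)

print(f"each leg: {leg_m:.0f} m ({leg_m / METERS_PER_MILE:.1f} miles)")
print(f"ball-to-ball gap: {gap_m:.0f} m ({gap_m / METERS_PER_MILE:.1f} miles)")
```

So the two ping-pong balls wind up farther from each other than either one is from the grapefruit.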

That completes placement of the nuclei. Now we have to consider the electrons (ten of them) that swarm around the nuclei. Where do they go, and how do we represent them?

Reader, the best thing I can think up, visualization-wise, is a fuzzy cloud. That Schrödinger equation thing I referred to above would (if we solved it) give us a probability of finding an electron within a certain hunk of space. That probability is relatively high close to the nuclei, and gets much smaller as you get further away. And, as an added complication, the electrons have a higher probability to flock around the oxygen nucleus than the hydrogen nuclei. Visualize that however you'd like. The page referenced above does it with color.

The page referenced above talks about the "Van der Waals" diameter of the water molecule, which is as good a size estimate as we are likely to get; that's about 2.75e-10 meters. Rounding that up to 2.8e-10 and scaling gives 2.8e-10 * 2.38e13 = 6664 meters, or about 4.14 miles.
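The quoted diameter is 2.75e-10 meters, while the multiplication uses 2.8e-10. Here's a quick sketch that runs both, just to check that the rounding doesn't change the punch line. (Names are mine.)

```python
SCALE = 2.38e13            # scale factor from the text
METERS_PER_MILE = 1609.344

# Map each real diameter (meters) to its scaled-up model diameter (meters).
model_diameters_m = {d: d * SCALE for d in (2.75e-10, 2.8e-10)}

for real_m, model_m in model_diameters_m.items():
    miles = model_m / METERS_PER_MILE
    print(f"{real_m:.2e} m -> {model_m:.0f} m ({miles:.2f} miles)")
```

Either way, the cloud comes out a bit over 4 miles across.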

So, to summarize: our scale model water molecule is a fuzzy cloud over 4 miles in diameter, in which is hiding a grapefruit and two ping-pong balls. And I admit, this would be difficult to picture on the TV screen in a way that might appeal to viewers. Still, it would be better than what they did.

Another fun fact: the electrons make up only 0.03% of the mass of the water molecule. So in your typical Poland Spring 500 milliliter (16.9 oz) water bottle, nearly all of the volume is those fuzzy electrons, but their total mass is a mere 150 milligrams or so; the remaining 499.85 grams resides in those tiny nuclei.
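That 0.03% can be checked with a few textbook constants. A rough sketch, assuming the bottle holds 500 grams of water (1 gram per milliliter) and ignoring the small mass difference from binding energy:

```python
# Standard physical constants, rounded; names are illustrative only.
ELECTRON_MASS_KG = 9.109e-31
ATOMIC_MASS_UNIT_KG = 1.6605e-27
WATER_MASS_U = 18.015        # mass of one H2O molecule in atomic mass units
ELECTRONS_PER_MOLECULE = 10  # 8 from oxygen, 1 from each hydrogen

molecule_mass_kg = WATER_MASS_U * ATOMIC_MASS_UNIT_KG
electron_fraction = ELECTRONS_PER_MOLECULE * ELECTRON_MASS_KG / molecule_mass_kg

BOTTLE_MASS_G = 500.0        # assumption: 500 mL at 1 g/mL
electron_mass_mg = electron_fraction * BOTTLE_MASS_G * 1000

print(f"electron share of the mass: {electron_fraction:.2%}")
print(f"electrons in the bottle: about {electron_mass_mg:.0f} mg")
```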

Here's one thing that does not scale well. How fast do water molecules typically move? Much faster than the "atoms" you see on The Big Bang Theory. Googling will tell you that they exhibit a range of speeds (a Maxwell-Boltzmann distribution, approximately) and for water molecules at (roughly) room temperature, the average speed works out to 590 meters/sec (≈1300 mph).
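No Googling required, actually: the mean speed of a Maxwell-Boltzmann distribution is sqrt(8kT/(πm)). A sketch, assuming "room temperature" means 298 K (my pick) and treating water vapor as an ideal gas:

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23
ATOMIC_MASS_UNIT_KG = 1.6605e-27
WATER_MASS_U = 18.015         # mass of one H2O molecule in atomic mass units
TEMPERATURE_K = 298.0         # assumption: roughly room temperature
MPH_PER_M_PER_S = 2.23694

molecule_mass_kg = WATER_MASS_U * ATOMIC_MASS_UNIT_KG

# Mean speed of the Maxwell-Boltzmann speed distribution.
mean_speed = math.sqrt(
    8 * BOLTZMANN_J_PER_K * TEMPERATURE_K / (math.pi * molecule_mass_kg)
)

print(f"mean speed: {mean_speed:.0f} m/s ({mean_speed * MPH_PER_M_PER_S:.0f} mph)")
```

That lands within a few meters/sec of the 590 figure; nudge the temperature and it moves a bit.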

So be glad that the water molecules in that Poland Spring bottle don't suddenly ("at random") decide to start moving in the same direction.

Yeah, that's impossible. Conservation of momentum saves us there.

But scaling that to our model (590 * 2.38e13), we get 1.4e16 meters/sec.

Which is (um) 47 million times the speed of light.

Very difficult to visualize!
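For the record, here's that last bit of arithmetic as a sketch. It assumes we scale only lengths and leave the clock alone, which is exactly why the answer comes out so silly.

```python
SCALE = 2.38e13                       # scale factor from the text
MEAN_SPEED_M_PER_S = 590              # average molecular speed quoted above
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the meter

# Scale the distance covered each second; the second itself is unchanged.
model_speed = MEAN_SPEED_M_PER_S * SCALE
multiple_of_c = model_speed / SPEED_OF_LIGHT_M_PER_S

print(f"model speed: {model_speed:.2g} m/s")
print(f"that is about {multiple_of_c / 1e6:.0f} million times the speed of light")
```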

